With the phase out of third-party cookies coming in the middle of Q4 2024, the urgency around identity solutions has reached a fever pitch among companies in the advertising ecosystem. Among ad tech companies, publishers, and advertisers, the user ID matching process is key to producing high-quality data for ad requests, reporting, and audience targeting. The value of building a graph of users, households, and devices is going to become more important than ever in maximizing value and generating the most relevant advertising. But capturing and linking all this data at scale has always been a significant technical challenge. The obvious choices of graph databases tended not to scale to the levels needed in ad tech, but the graph querying capabilities still need to be addressed. In 2022, we wrote a paper showing how we were able implement a scalable solution for identity graphs using HBase and Spark. Since that time, promising new technologies have emerged that offer the ability to broaden the capabilities of in-house identity graphs. Our early experiments with Aerospike Graph on AWS have been particularly promising.

Mapping an Identity Graph

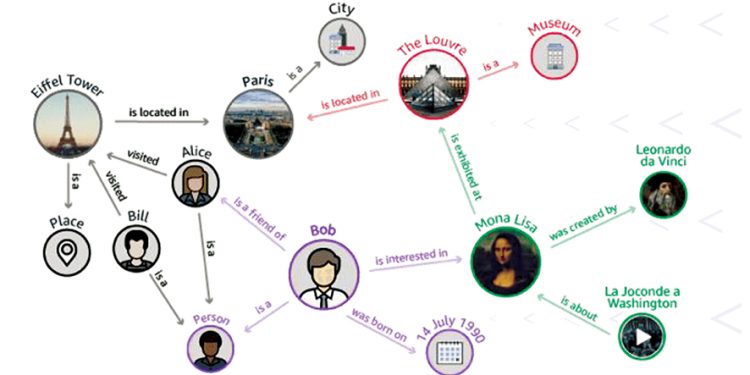

Maximizing value in digital advertising depends on getting the right message to the right people at the right time. In the ad tech world, much of this goal is accomplished with the ability to map first-party data, such as information known by a publisher about a particular reader, with anonymized behavioral or demographic information known to DSPs, SSPs, and other enrichers as well as third-party data providers. Matching these disparate data streams together gives ad tech companies the ability to produce compelling user profiles, and these profiles give publishers and advertisers the ability to provide a more relevant experience and achieve more effective results. In practice, every company in the chain is building an identity graph that maps users to relevant data, devices to users, users to households, and so forth.

On a technical level, this process means that companies need to match IDs from many sources such as publishers, DSPs, SSPs, and other data enrichers and merge them with their own data and data they may acquire from other sources. Ad tech companies need to not only capture user and device data but also make it queryable and actionable for things like determining buyer intent, optimizing yield, building segments, and facilitating richer reporting and attribution. This need makes it crucial to combine all collected ID pairs into a useful identity graph. An identity graph helps advertisers get a full picture of a user’s journey through a marketing funnel across multiple browsers, apps, and devices. For example, if a user looks for a product on his or her desktop computer, the advertiser is able to target that same user on his or her mobile phone, through email campaigns, or in social networks. So, this set of user IDs makes up an identity graph that stores the relationship between a user’s personal devices, browsers, IP addresses, or household devices.

As a result, ad tech companies and publishers need to store and join many different data sources. When dealing with these data sources, the main challenge is that tracking signals may come from the same user multiple times per minute, with thousands to millions of users coming each second, producing at minimum terabytes of data each day. Without a carefully designed architecture, the data volume becomes so large that it is not feasible to run a query against even one month’s worth of data, let alone make decisions at scale.

Understanding the Technical Challenge

A user ID tracking system receives only pairs of IDs, not an entire set of IDs at once. For example, when a user logs into a website, the tracking system may need to map a site identifier to an email hash. Another may be a request to map a partner’s user ID to a company’s ID. Each of these pairs is separate, with each party or application offering only its own narrow identification of a user.

A tracking system’s main task is to build out the user/device graph, starting with a single pair of IDs and creating more complex relationships from there. The system evolves in real time, continually building out and enhancing the graph as well as answering queries on the fly. At the same time, the entire graph must be usable at any time for merging into reports and interpreting large batches. This means it is a key requirement to not only store and query data but also to handle hundreds of thousands of insertions per second, almost 24/7, all while other applications are using the system uninterrupted (doing full-scan data jobs or single key-value or range-key queries).

Our typical approach to handling ultrafast data queries at such scale has been to use databases with highly optimized key-value query capabilities, such as Aerospike or Redis. We have had a lot of success in particular using Aerospike in ad tech because it has demonstrated exceptional speed and recoverability as well as the ability to handle large data volumes with a relatively small cluster size. But the graph queries across the identity graph don’t lend themselves to such an architecture. At the same time, dedicated graph databases tend to work primarily on single node data volumes or on graphs that can reasonably be effectively sharded at design time, neither of which is practical in this use case.

Solving in the Real World

To take a concrete example, we had a client generating approximately a terabyte of tracking data each day. The cookie-based tracker alone produced more than ten thousand files per hour to analyze, and one of the partners supplied about 400 thousand files per hour. This data needed to be deduplicated, merged into a queryable graph, and used on a consistent basis. We built several proofs of concept using graph databases, key-value databases, and columnar databases and ran them through a series of tests to simulate ad tech workloads.

The fact that the dataset is a large graph suggests that graph databases would be an ideal choice for managing this task. For example, the built-in graph traversal features seem like an easy way to store IDs and their relationships and to quickly do multi-hop queries. For example, such functionality makes reaching an email hash through a browser cookie, or getting a mobile advertisement ID through an email hash, relatively simple to implement. The challenges of scale were clear, but the native graph query functionality was too promising to ignore.

However, we found the convenience of graph databases was outweighed by the challenges of scaling to the data sizes we needed. We experimented with Amazon Neptune, NebulaGraph, TigerGraph, and others, and we found that different graph solutions could store large amounts of data and make quick multi-hop ID queries. However, in ad tech, there is often a need to implement full table scans—for example, when a partner needs to join a company’s user or device data with its own and output a user intersection between the two systems. These wide queries proved problematic with all the graph databases we tried. In the end, we came to the conclusion that it would be easier to overlay certain graph query functionality on top of the database with better horizontal scalability than to attempt to incorporate horizontal scaling into a database with stronger graph capabilities.

Building our own graph

In the end, we elected to roll our own graph capabilities on top of Apache HBase deployed on Amazon EMR. By building custom indexes that store a predefined graph path in each record, we have been able to re-create the most important aspects of graph functionality needed for the ad tech use case. Not every graph capability we wanted was available to us, notably multi-hop lookups in arbitrary directions, such as from email hash to cookie and back. But the key functionality, such as from querying device ID to cookie to email hash, works well in a production capacity. Apache Spark jobs on top of this allowed us to run the full table scans in parallel.

Moreover, the Apache HBase solution scaled well. Our testing approach involved providing clean and prepared-for-insertion data of approximately 500 thousand ID pairs per second, each with different data retention requirements. (Different types of IDs have different lifecycles, so some IDs in the database needed to be stored for just a few weeks, but other types of IDs may need to be stored for multiple months.) More than 50 percent of the data consisted of duplicates that needed to be cleaned within a few minutes of insertion. The solution demonstrated response times in milliseconds even while full table scans were running. We have since incorporated similar architecture into production, and we have used this design approach in other high-scale projects, both inside and out of ad tech.

Seeing a New Possibility

Although we are proud of this solution and it has proven successful in the real world, it comes with inherent limitations. In particular, although we were able to maintain the very convenient graph abstraction over our datasets, we ultimately needed to hard-code precomputed query paths into Apache HBase indexes. This means that we need to know every type of graph query that will be done at scale at the time the indexes are built, and it means that the indexing burden grows exponentially with the number of query types. This is a standard limitation of solutions built on top of data pre-aggregation.

In the past year, Aerospike has released Aerospike Graph, which provides a convenient and familiar Gremlin Query Language interface on top of Aerospike core components. It leverages Apache TinkerPop to build graph computing capabilities on top of Aerospike’s engine. Aerospike Graph has the potential to dramatically simplify the custom indexing we have been implementing, tackling a majority of the use cases at higher speed and supporting the ability for ad hoc graph queries, which our existing implementations currently lack.

We have had a very good experience using Aerospike as a key-value store and have seen exceptional performance in dozens of deployments (as well as our own research). It seems highly promising to perform multi-hop queries over such a low-latency back end. We have run Apache Spark on top of Aerospike, though it is not clear if this part of the system is suitable for the new Aerospike product. It is research we are currently undertaking.

Conclusion

Like so much in ad tech, there is no single silver bullet for maintaining an identity graph, but it can be done cost-effectively using the right technologies for each function. By writing our own indexes on top of columnar data stores like Apache HBase, we have been able to create production-

ready identity graphs that handle millions of incoming identity pairs per second while simultaneously allowing batch processing of datasets.

The challenge of such homegrown solutions is that they require significant customization based on the specific kinds of queries needed. We are constantly refining the solution to optimize for various needs and have implemented similar ones on technologies like parquet files on Amazon S3. One area in particular we continue to think about is how to make the graph query capability more flexible without introducing too much complexity. The recent release from Aerospike shows promise in our early tests, and we will continue to explore this database further.

About Lineate:

Lineate is a leading implementer of cloud solutions, helping organizations scale their technology to manage vast data volumes and user bases. Our expertise in distributed systems along with our unique culture focused on knowledge breadth and creativity sets us apart in a competitive industry. With a global presence spanning seven countries, our client base is primarily in the United States and Western Europe.